Well, I guess it would be truer to say that computers, by default, don’t know a phoneme from a salad fork!

Sorry for forgetting to mention CLTS (there is at least a short mention here). It must have been a crazy amount of work. ![]()

Features  Graphemes

Graphemes

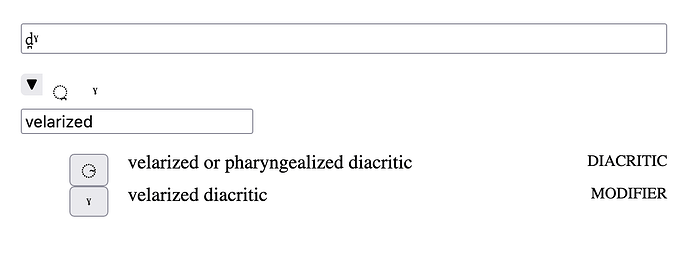

From my experience in (air-conditioned) fieldwork, a primary difficulty in early days of a project is input. And not just the questions of “where is my character?” “how can I input characters efficiently?”. Both of those are challenging, but the one that I think is the most challenging (and most frequent) is “I heard a sound which I believe has features x, y, and z. Which IPA graphemes do I need?” So for instance, you might say, “that thing sounded like a voiced dental stop «d̪ » . But if it also sounds velarized…”

Then you have to remember that “velarized” maps to «ˠ», superscript gamma.

UIs

A database like CLTS is going to be the solution to working with featural phonetic encodings, but there are a lot of user interface questions related to how we actually access and insert that information in a transcription.

I built a sort of feature-based-button-panel thingy in the context of my dissertation, it looks like this:

Online here:

https://docling.net/book/docling/ipa/ipa-input/ipa-input-sample.html

There is plenty of room for experimentation with interfaces like this. Various UI tools for search and input could be designed. So the tool above for instance should be rewritten with BIPA data as the source, but it needs (familiar) feature names for everything. I’m going take a closer look at the source and see if perhaps it’s already there.

This particular topic in digital documentary data always makes me dizzy. So much detail!

![]()