I’ve just spent way too much time messing around with Wikidata.org:

This project is… big. But it’s interesting to think about how it might be helpful for linguists.

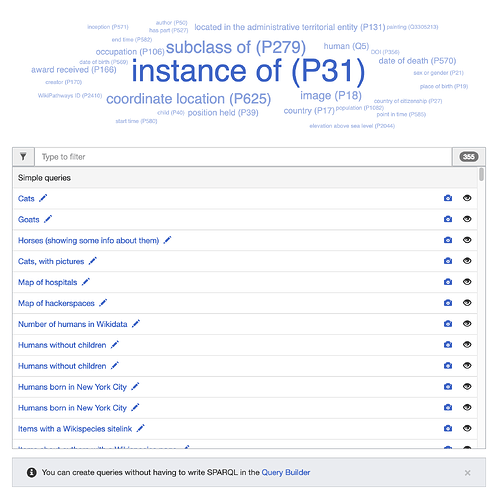

Wikidata uses a query language called SPARQL, which has quite a learning curve. I had to follow along with several tutorials before I felt like I had any inkling of what was going on. I think the best first step is to just try running some example queries. There are several available under the button at the top of the query interface:

- A lot of cat pictures

- A rather interesting dynamic dictionary (There’s a whole category of example searches having to do with “lexemes”, definitely worth more investigation.)

- Another interesting tool that could be used for fieldwork: a dynamic picture dictionary. The trick here would be to figure out how to intersect this kind of query with something else (say, wildlife of a particular region)

And so forth.

Here’s one I cobbled together from the examples. It maps the birthplaces of female linguists in Wikidata:

```sparql
#Find the birth place of all female linguists
#defaultView:Map
SELECT ?item ?itemLabel ?place ?coord
WHERE {
  ?item wdt:P31 wd:Q5 . # is a human
  ?item wdt:P106 wd:Q14467526 . # is a linguist
  ?item wdt:P21 wd:Q6581072 . # is a female
  ?item wdt:P19 ?place.
  ?place wdt:P625 ?coord.
  SERVICE wikibase:label { bd:serviceParam wikibase:language "nl,en,fr,de,es,it,no" }
}
```

You can try running it yourself if you like.

Like I said, the query language is not user-friendly at all. But the output is!

Obvious and disappointing bias there, but still, 1681 female linguists is a lot. Of course, if you can master SPARQL, you could do a zillion other things.

One more quick example:

```sparql
SELECT ?language ?languageLabel ?speakers WHERE {
  ?language wdt:P31 wd:Q34770 ;
            wdt:P17 wd:Q1033 ;
            wdt:P1098 ?speakers .
  SERVICE wikibase:label { bd:serviceParam wikibase:language "[AUTO_LANGUAGE],en". }
}
ORDER BY DESC(?speakers)
```

This lists the languages of Nigeria, ordered by number of speakers.

Is it reliable? Well, as reliable as Wikipedia is, I guess. But where else could one get such data so immediately, and in such a convenient form? (You can download a JSON or CSV file with one click!)
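If you'd rather not click the download button every time, the same JSON can be fetched from a script. Here is a minimal Python sketch of how that might look, using only the standard library. Some assumptions on my part: it sends the Nigeria query to the public endpoint at `https://query.wikidata.org/sparql`; the function names (`build_url`, `rows_from_json`, `run_query`) and the User-Agent string are placeholders I made up; and I've used plain `"en"` for the label language because, as far as I can tell, `[AUTO_LANGUAGE]` is something the web interface fills in for you.

```python
import json
import urllib.parse
import urllib.request

# Public Wikidata SPARQL endpoint.
ENDPOINT = "https://query.wikidata.org/sparql"

# The Nigeria query from above, with "en" instead of [AUTO_LANGUAGE]
# (assumption: that placeholder is only substituted by the web interface).
QUERY = """
SELECT ?language ?languageLabel ?speakers WHERE {
  ?language wdt:P31 wd:Q34770 ;
            wdt:P17 wd:Q1033 ;
            wdt:P1098 ?speakers .
  SERVICE wikibase:label { bd:serviceParam wikibase:language "en". }
}
ORDER BY DESC(?speakers)
"""


def build_url(query):
    """Return a GET URL that asks the endpoint for JSON results."""
    params = urllib.parse.urlencode({"query": query, "format": "json"})
    return ENDPOINT + "?" + params


def rows_from_json(payload):
    """Flatten SPARQL JSON results into a list of {variable: value} dicts."""
    return [
        {name: cell["value"] for name, cell in binding.items()}
        for binding in payload["results"]["bindings"]
    ]


def run_query(query, user_agent="linguistics-forum-example/0.1 (placeholder)"):
    """Send the query over HTTP and return the flattened rows (needs network)."""
    request = urllib.request.Request(
        build_url(query), headers={"User-Agent": user_agent}
    )
    with urllib.request.urlopen(request) as response:
        return rows_from_json(json.load(response))


# Usage (needs network access):
#   for row in run_query(QUERY)[:10]:
#       print(row["languageLabel"], row["speakers"])
```

Every value comes back as a string, so `speakers` would need converting before you do any arithmetic with it. Swapping `wd:Q1033` for another country's item ID should give the same list for that country.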

Anyway, I thought I’d start this topic so we could use it as a place to figure out more about this resource.