Over in Flextext to Plaintext, @rgriscom shared his cool code for converting a flextext file to plain text. (I just learned that apparently some people also think that ‘plaintext’ means something else, but those people are clearly wrong.)

This got me thinking about a topic that we might want to discuss here. As Richard mentioned, I often ramble on about something called JSON, but without really getting into what it is. (We will get into it a little next Monday!)

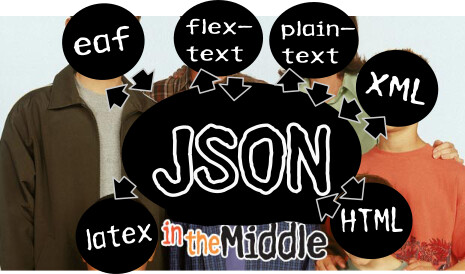

Here I would like to introduce an idea that might help to explain why JSON is so great. Let’s call it JSON in the Middle.

I’m way too old to be referencing Malcolm in the Middle. Am I cool yet?

The reality of our field is that we have a lot of file formats. Converting between formats is just a fact of our documentary lives. @rgriscom’s code addresses the following scenario:

[graphviz]

digraph {

rankdir=LR;

flextext -> plaintext

}

[/graphviz]

Exactly what kind of plaintext one might want could vary in different circumstances (which tiers? how much spacing? etc.), but that is the problem that @rgriscom had, and he solved it.

In fact, we do this sort of thing in lots of other circumstances too. Like:

[graphviz]

digraph {

rankdir=LR;

eaf -> HTML

}

[/graphviz]

Among many other features, LingView is a tool that carries out this conversion. Come to think of it, it actually does both of these:

[graphviz]

digraph {

rankdir=LR;

flextext -> HTML

eaf -> HTML

}

[/graphviz]

I once worked on a project with Brad McDonnell that did:

[graphviz]

digraph {

rankdir=LR;

toolbox -> latex

}

[/graphviz]

You get the idea. People convert from one format to another all the time.

Two things

But there are actually two things happening in what we call “conversion”:

- Import from a format

- Export to some other format.

If we write code to convert N input formats to M output formats, we are going to end up writing M * N programs. It’s probably not the case that we always want every import format to go to every export format, but let’s just imagine we do for the sake of argument. Just in the examples above, we’ve mentioned flextext, plaintext, eaf, HTML, toolbox, and latex. That’s six… do we need 6² = 36 programs for conversion? When we add some other format, that’s 7² = 49 programs… that gets yikes real quick.
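To make that arithmetic concrete, here is a small Python sketch that simply counts (input, output) pairs. The seventh format, `xml`, is only a stand-in for "some other format", and the second function is my own aside: it shows the count if you skip the pointless X-to-X conversions.

```python
from itertools import product

# The six formats mentioned in the examples above.
formats = ["flextext", "plaintext", "eaf", "HTML", "toolbox", "latex"]

def count_direct_converters(formats):
    """One program per (input, output) pair: the N-squared estimate."""
    return len(list(product(formats, repeat=2)))

def count_distinct_pairs(formats):
    """Same idea, but skipping conversions from a format to itself."""
    return sum(1 for src, dst in product(formats, repeat=2) if src != dst)

print(count_direct_converters(formats))            # 36
print(count_distinct_pairs(formats))               # 30
# "xml" is a hypothetical seventh format, used only for illustration.
print(count_direct_converters(formats + ["xml"]))  # 49
```

Either way you count it, the number of programs grows quadratically with the number of formats.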

(By the way, some formats suck as import formats: I’m looking at you, LaTeX…)

What’s the alternative?

We’re sort of already creating JSON anyway

When you write a conversion program, what do you actually do? You “parse” the content of the input format, which is to say, you go through the content in some way (by using string parsing methods, say, or a more abstract tool like an XML parser), and then you end up with “native” data structures in the programming language you’re working with. Then, you take that data structure, and you go through it and generate the output format, usually writing it to a file.
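Here is a minimal Python sketch of those two steps. Everything in it is an assumption made for illustration: the little XML snippet is a made-up format (it is not real flextext), and the function names `import_text` and `export_plaintext` are mine.

```python
import xml.etree.ElementTree as ET

# A made-up input format, NOT real flextext: just enough XML to show the idea.
SOURCE = """
<text title="Hello">
  <sentence>
    <transcription>Mia nomo estas Pat.</transcription>
    <translation>My name is Pat.</translation>
  </sentence>
</text>
"""

def import_text(xml_string):
    """Step 1: parse the input format into native dicts and lists."""
    root = ET.fromstring(xml_string.strip())
    return {
        "metadata": {"title": root.get("title")},
        "sentences": [
            {
                "transcription": s.findtext("transcription"),
                "translation": s.findtext("translation"),
            }
            for s in root.iter("sentence")
        ],
    }

def export_plaintext(text):
    """Step 2: walk the native structure and generate the output format."""
    lines = [text["metadata"]["title"], ""]
    for sentence in text["sentences"]:
        lines.append(sentence["transcription"])
        lines.append(sentence["translation"])
        lines.append("")
    return "\n".join(lines)

data = import_text(SOURCE)
print(export_plaintext(data))
```

Notice that `data`, the thing in the middle, is nothing but dictionaries, lists, and strings.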

But here’s the thing: in the “data format” stage of that process, what you’re dealing with is going to be pretty dang close to JSON anyway. The main feature of JSON is that it’s an easy way to write down objects and arrays. The names for the different structures differ from language to language, but there is almost always something similar:

| Language | Object | Array |
|---|---|---|
| JavaScript | Object | Array |
| Python | Dictionary | List |
| Ruby | Hash | Array |
| R | Something | Something else* |

* R hates me, somebody tell me the equivalent?
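For the Python row of that table, the correspondence can be seen directly with the standard `json` module. This is just a sketch: the sentence data is borrowed from the Esperanto example used in this post, trimmed to two words.

```python
import json

# A Python dict (JSON "object") that holds a list (JSON "array") of dicts.
sentence = {
    "transcription": "Amikon montras malfeliĉo.",
    "words": [
        {"form": "amik-o-n", "gloss": "friend-N-ACC"},
        {"form": "montr-as", "gloss": "show-PRES"},
    ],
}

# Native structure -> JSON text. ensure_ascii=False keeps "ĉ" readable.
as_json = json.dumps(sentence, ensure_ascii=False, indent=2)
print(as_json)

# JSON text -> native structure again.
restored = json.loads(as_json)
print(type(restored).__name__, type(restored["words"]).__name__)  # dict list
print(restored == sentence)  # True
```

The round trip loses nothing, which is what makes JSON a comfortable way to write these structures down.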

JSON stands for “JavaScript Object Notation”, but that name doesn’t really reflect the way it’s used now. It’s really more like “generic data language”, because basically every language supports it.

No seriously, so many languages.

8th, ActionScript, Ada, AdvPL, APL, ASP, AWK, BlitzMax, C, C++, C#, Clojure, Cobol, ColdFusion, D, Dart, Delphi, E, Fantom, FileMaker, Fortran, Go, Groovy, Haskell, Java, JavaScript, LabVIEW, Lisp, LiveCode, LotusScript, Lua, M, Matlab, Net.Data, Nim, Objective C, OCaml, PascalScript, Perl, Photoshop, PHP, PicoLisp, Pike, PL/SQL, Prolog, PureBasic, Puredata, Python, R, Racket, Rebol, RPG, Rust, Ruby, Scala, Scheme, Shell, Squeak, Tcl, Visual Basic, Visual FoxPro

JSON in the Middle

The alternative is to embrace the fact that JSON is so universal, and use it as the endpoint of “importing” and the starting point of “exporting”. Then you end up with a graph like this:

[graphviz engine=dot]

digraph {

rankdir=LR

subgraph cluster1 {

toolboxIn [label=toolbox]

flextextIn [label=flextext]

eafIn [label=eaf]

HTMLIn [label=html]

XMLIn [label=xml]

}

subgraph cluster2 {

peripheries=0

json [label=JSON]

}

subgraph cluster3 {

toolboxOut [label=toolbox]

latexOut [label=latex]

flextextOut [label=flextext]

eafOut [label=eaf]

HTMLOut [label=html]

XMLOut [label=xml]

}

toolboxIn -> json

flextextIn -> json

eafIn -> json

HTMLIn -> json

XMLIn -> json

json -> toolboxOut

json -> latexOut

json -> flextextOut

json -> eafOut

json -> HTMLOut

json -> XMLOut

}

[/graphviz]
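One way to picture the graph above in code is as two small registries, one of importers and one of exporters, with JSON passed between them. The Python below is only a sketch under my own assumptions: the `tsv` input format, the function names, and the tiny `{"words": [...]}` structure are all hypothetical stand-ins, not an existing tool.

```python
import html
import json

def import_tsv(source):
    """Hypothetical importer: 'form<TAB>gloss' lines -> native structure."""
    words = []
    for line in source.strip().splitlines():
        form, gloss = line.split("\t")
        words.append({"form": form, "gloss": gloss})
    return {"words": words}

def export_plaintext(data):
    """Hypothetical exporter: one 'form<TAB>gloss' line per word."""
    return "\n".join(f"{w['form']}\t{w['gloss']}" for w in data["words"])

def export_html(data):
    """Hypothetical exporter: a bare HTML table, one row per word."""
    rows = "".join(
        f"<tr><td>{html.escape(w['form'])}</td><td>{html.escape(w['gloss'])}</td></tr>"
        for w in data["words"]
    )
    return f"<table>{rows}</table>"

# Each arrow in the graph is one entry in one of these registries.
IMPORTERS = {"tsv": import_tsv}
EXPORTERS = {"plaintext": export_plaintext, "html": export_html}

def convert(source, src_format, dst_format):
    data = IMPORTERS[src_format](source)
    hub = json.dumps(data, ensure_ascii=False)  # the JSON "in the middle"
    return EXPORTERS[dst_format](json.loads(hub))

sample = "amik-o-n\tfriend-N-ACC\nmontr-as\tshow-PRES\n"
print(convert(sample, "tsv", "plaintext"))
print(convert(sample, "tsv", "html"))

# Counting arrows in the graph: 5 importers plus 6 exporters.
print(5 + 6, "programs via JSON versus", 5 * 6, "direct converters")
```

Adding a new format means adding one importer and (maybe) one exporter, rather than a new converter for every format that already exists. And since the hand-off is a JSON string, the importer and the exporter would not even need to be written in the same language.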

There are so many advantages to this world. Any one of those arrows could be written in any programming language, for instance. If our community has expertise in the form of someone who knows Haskell, well, great! How easy that particular piece of code will be for the average documentary linguist to run is another question, but there’s no reason to rule that language out, if the result is a format that “swims downstream” — that is to say, it exports or imports JSON in the standardized flavor.

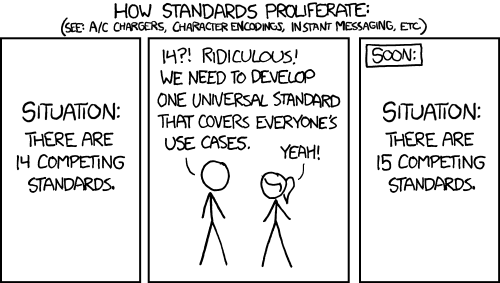

Okay, but what flavor of JSON?

Did I just say “standardized”? But…

The difference between what I’m proposing here and the XKCD scenario is that this putative JSON “flavor” would encompass something very close to the kind of data we’re already dealing with in documentation, but it would encompass all of the basic data types. That means, all three parts of the Boasian trilogy. If we can do that, then we have a “lingua franca” JSON flavor, so that all these various conversion programs will have a well-defined starting point.

So, I’m going to be bold here and just throw in a kitchen-sink (but small) example that shows my own opinion of the things that we have to have if we’re going to encode a documentary database as JSON. If you click the arrow below, be warned, you’re going to see a bunch of stuff — if you’re not familiar with JSON it may seem a little kookytimes. But try looking through it anyway. You might have more success looking at what lies behind the JSON example…

A very simple JSON “Boasian database”

{

"language": {

"metadata": {

"name": "Esperanto",

"codes": {

"glottocode": "espe1235",

"iso639": "epo"

},

"notes": [

"This is a (very) simple example of a JSON structure that contains a corpus, lexicon, and grammar."

]

}

},

"corpus": {

"metadata": {

"title": "A tiny Esperanto corpus"

},

"texts": [

{

"metadata": {

"title": "Hello"

},

"sentences": [

{

"transcription": "Mia nomo estas Pat.",

"translation": "My name is Pat.",

"words": [

{

"form": "mi-a",

"gloss": "ego-ADJ"

},

{

"form": "nom-o",

"gloss": "name-1.SG"

},

{

"form": "est-as",

"gloss": "to_be-PRES"

},

{

"form": "Pat",

"gloss": "Pat"

}

]

}

]

},

{

"metadata": {

"title": "Advice"

},

"sentences": [

{

"transcription": "Amikon montras malfeliĉo.",

"translation": "A friend shows in misfortune.",

"words": [

{

"form": "amik-o-n",

"gloss": "friend-N-ACC"

},

{

"form": "montr-as",

"gloss": "show-PRES"

},

{

"form": "malfeliĉ-o",

"gloss": "misfortune-N"

}

]

}

]

}

]

},

"lexicon": {

"metadata": {

"title": "Lexicon derived from corpus."

},

"words": [

{

"form": "amik-o-n",

"gloss": "friend-N-ACC"

},

{

"form": "est-as",

"gloss": "to_be-PRES"

},

{

"form": "malfeliĉ-o",

"gloss": "misfortune-N"

},

{

"form": "mi-a",

"gloss": "ego-ADJ"

},

{

"form": "montr-as",

"gloss": "show-PRES"

},

{

"form": "nom-o",

"gloss": "name-1.SG"

},

{

"form": "Pat",

"gloss": "Pat"

}

]

},

"grammar": {

"metadata": {

"title": "Esperanto grammatical category index."

},

"categories": [

{

"category": "pos",

"value": "adj",

"symbol": "ADJ"

},

{

"category": "person",

"value": "first",

"symbol": "1"

},

{

"category": "number",

"value": "singular",

"symbol": "SG"

},

{

"category": "tense",

"value": "present",

"symbol": "PRES"

},

{

"category": "pos",

"value": "noun",

"symbol": "N"

},

{

"category": "case",

"value": "accusative",

"symbol": "ACC"

}

]

}

}
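The lexicon in this example is titled “Lexicon derived from corpus.”, so here is a rough Python sketch of how such a derivation might go. It is an assumption about the procedure rather than a description of how the example was actually built: it collects every distinct form and gloss pair from the corpus and sorts them case-insensitively by form, which happens to reproduce the order shown. The embedded data is a trimmed copy of the corpus above.

```python
import json

# A trimmed copy of the corpus above (metadata and translations left out).
DATABASE_JSON = """
{
  "corpus": {
    "texts": [
      {"sentences": [{"words": [
        {"form": "mi-a", "gloss": "ego-ADJ"},
        {"form": "nom-o", "gloss": "name-1.SG"},
        {"form": "est-as", "gloss": "to_be-PRES"},
        {"form": "Pat", "gloss": "Pat"}
      ]}]},
      {"sentences": [{"words": [
        {"form": "amik-o-n", "gloss": "friend-N-ACC"},
        {"form": "montr-as", "gloss": "show-PRES"},
        {"form": "malfeliĉ-o", "gloss": "misfortune-N"}
      ]}]}
    ]
  }
}
"""

def derive_lexicon(database):
    """Collect each distinct (form, gloss) pair once, sorted by form."""
    seen = {}
    for text in database["corpus"]["texts"]:
        for sentence in text["sentences"]:
            for word in sentence["words"]:
                seen.setdefault((word["form"], word["gloss"]), word)
    # Assumption: case-insensitive sort on the form.
    words = sorted(seen.values(), key=lambda w: w["form"].casefold())
    return {"metadata": {"title": "Lexicon derived from corpus."}, "words": words}

database = json.loads(DATABASE_JSON)
lexicon = derive_lexicon(database)
print([w["form"] for w in lexicon["words"]])
# ['amik-o-n', 'est-as', 'malfeliĉ-o', 'mi-a', 'montr-as', 'nom-o', 'Pat']
```

Because the corpus is already plain objects and arrays, the whole derivation is three nested loops and a sort.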

Below is another presentation of the same data. Unfortunately I can’t control how tables are formatted (without work I don’t have time to do!) in this forum, so I’ll just let you take a look as-is. Hopefully you’ll be able to glean some idea of the way this structure contains a corpus, a lexicon, and a very, very bare representation of “grammar”.

Tabular demonstration of a JSON “Boasian database”

| Key | Contents |
|---|---|
| language | metadata: name, codes (glottocode, iso639), notes |
| corpus | metadata (title); texts, each with metadata (title) and sentences (transcription, translation, words) |
| lexicon | metadata (title); words (form, gloss) |
| grammar | metadata (title); categories (category, value, symbol) |
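Having the corpus and the grammar in one structure also makes cross-checks cheap. As a sketch, the Python below looks for gloss symbols that are used in the corpus but missing from the grammar's category index. The rule for spotting a symbol (split the gloss on `-` and `.`, keep the all-caps or numeric pieces) is my own assumption about the glossing convention, and the data is a trimmed copy of the example with `SG` deliberately left out of the grammar so that there is something to find.

```python
import re

# Trimmed copy of the example; "SG" is deliberately missing from the grammar.
database = {
    "corpus": {"texts": [{"sentences": [{"words": [
        {"form": "amik-o-n", "gloss": "friend-N-ACC"},
        {"form": "montr-as", "gloss": "show-PRES"},
        {"form": "nom-o", "gloss": "name-1.SG"},
        {"form": "Pat", "gloss": "Pat"},
    ]}]}]},
    "grammar": {"categories": [
        {"category": "pos", "value": "noun", "symbol": "N"},
        {"category": "case", "value": "accusative", "symbol": "ACC"},
        {"category": "tense", "value": "present", "symbol": "PRES"},
        {"category": "person", "value": "first", "symbol": "1"},
    ]},
}

def gloss_symbols(gloss):
    """Assumed convention: category labels are all-caps or numeric segments."""
    return [seg for seg in re.split(r"[-.]", gloss) if seg.isupper() or seg.isdigit()]

def undefined_symbols(database):
    """Symbols used in corpus glosses but absent from the grammar index."""
    defined = {c["symbol"] for c in database["grammar"]["categories"]}
    used = set()
    for text in database["corpus"]["texts"]:
        for sentence in text["sentences"]:
            for word in sentence["words"]:
                used.update(gloss_symbols(word["gloss"]))
    return sorted(used - defined)

print(gloss_symbols("name-1.SG"))   # ['1', 'SG']
print(undefined_symbols(database))  # ['SG']
```

Run against the full example above, where `SG` is defined, the same check should come back empty.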

Also, if you feel like it, you can try messing around with that JSON/tabulation thing interactively here.

I’ll stop here, but I’m hoping this is of some interest to some people (especially my committee, since this sort of thing is what my dissertation is about!!)